Tutorial: Build a RAG Application Using LangChain

LangChain is a framework for connecting large language models (LLMs) to document retrieval, so that applications can ground their answers in relevant source material instead of relying only on what the model learned during training. This tutorial walks through the steps to build a Retrieval-Augmented Generation (RAG) application with LangChain.

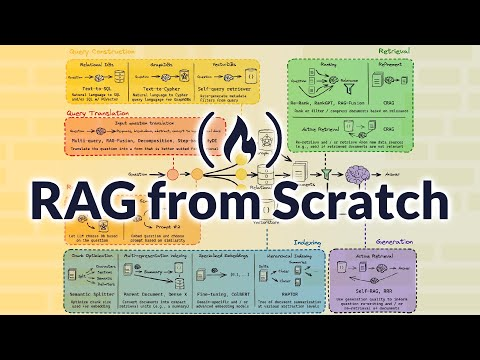

What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is an architecture that combines document retrieval with language generation. For each query, it first retrieves relevant documents from a dataset, then passes them to a language model together with the query, so the response is grounded in that content. Common use cases include document search, question answering, and customer support automation.

Why LangChain for RAG Applications?

LangChain simplifies RAG development by providing a single framework that connects language models (such as GPT) to external data sources, such as databases and document repositories. Developers can build conversational systems without hand-writing the glue code between retrieval, prompting, and generation.

Setting Up the Development Environment

Installing Dependencies

To build a RAG application with LangChain, install the necessary libraries:

pip install langchain faiss-cpu transformers

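Here, langchain provides the orchestration framework, faiss-cpu provides the CPU build of the FAISS vector index used for document retrieval, and transformers provides access to Hugging Face models. Depending on the LangChain version, the FAISS integration may also require the separate langchain-community package.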
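Before going further, it can help to confirm that the packages are importable. The following is a minimal sketch using only the standard library; the helper name `check_dependencies` is made up for this tutorial, and the only non-obvious detail is that the faiss-cpu package is imported under the module name `faiss`.

```python
import importlib.util

# Map each pip package name to the module name used in `import` statements.
# faiss-cpu is the one case where the two differ.
REQUIRED_MODULES = {
    "langchain": "langchain",
    "faiss-cpu": "faiss",
    "transformers": "transformers",
}


def check_dependencies(modules=REQUIRED_MODULES):
    """Return {pip_name: bool} saying whether each module can be located."""
    return {
        pip_name: importlib.util.find_spec(module_name) is not None
        for pip_name, module_name in modules.items()
    }


for pip_name, found in check_dependencies().items():
    print(f"{pip_name}: {'installed' if found else 'missing'}")
```

Any package reported as missing can be installed again with pip before continuing.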

Configuring LangChain

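LangChain has a modular architecture that separates retrieval, generation, and orchestration into distinct components. After installation, configuration amounts to choosing a retrieval backend (here, a FAISS index) and a language model, then wiring them together so that retrieved documents flow into the prompt sent to the model.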
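The separation between retrieval, generation, and orchestration can be illustrated without any library at all. The sketch below is plain Python, not LangChain's API: `Retriever`, `Generator`, `make_rag_pipeline`, `keyword_retriever`, and `echo_generator` are all hypothetical names chosen for illustration, and the two stand-in components exist only so the example runs without a model or an index.

```python
from typing import Callable, List

# Hypothetical type aliases for this sketch; they are not LangChain classes.
Retriever = Callable[[str], List[str]]  # query -> relevant passages
Generator = Callable[[str], str]        # prompt -> answer text


def make_rag_pipeline(retriever: Retriever, generator: Generator) -> Callable[[str], str]:
    """Orchestration: wire a retriever and a generator into one callable."""

    def answer(query: str) -> str:
        passages = retriever(query)
        context = "\n".join(passages)
        prompt = f"Context:\n{context}\n\nQuestion: {query}"
        return generator(prompt)

    return answer


def keyword_retriever(query: str) -> List[str]:
    """Stand-in retriever: returns documents sharing at least one word with the query."""
    corpus = [
        "FAISS is a library for similarity search over vectors.",
        "LangChain links language models with external data sources.",
    ]
    words = set(query.lower().split())
    return [doc for doc in corpus if words & set(doc.lower().split())]


def echo_generator(prompt: str) -> str:
    """Stand-in generator: a real one would call a language model here."""
    return f"[model would answer using {len(prompt)} prompt characters]"


pipeline = make_rag_pipeline(keyword_retriever, echo_generator)
print(pipeline("What is FAISS?"))
```

Because the orchestration function only depends on the two callables, either component can be swapped (for example, a FAISS-backed retriever or a hosted model) without touching the other.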

Understanding the RAG Workflow in LangChain

Data Retrieval Pipeline

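The retrieval component fetches the documents most relevant to a user query. Documents are first converted to embedding vectors and stored in an index; at query time, the query is embedded the same way and the nearest vectors are returned. LangChain can use FAISS (Facebook AI Similarity Search) as this index, which keeps similarity search fast even over large collections.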
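To make the idea of vector retrieval concrete, here is a toy version in pure Python. It is a sketch under loud assumptions: the word-count `embed` function stands in for a learned embedding model, and the brute-force `ToyIndex` class stands in for FAISS. Neither is part of LangChain or FAISS, and the sample documents are invented for the example.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a sparse word-count vector (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyIndex:
    """Brute-force index: stores (vector, document) pairs and scans all of them per query."""

    def __init__(self, documents):
        self.entries = [(embed(doc), doc) for doc in documents]

    def search(self, query, k=2):
        """Return up to k documents with non-zero similarity, best match first."""
        query_vec = embed(query)
        scored = [(cosine(query_vec, vec), doc) for vec, doc in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for score, doc in scored[:k] if score > 0]


docs = [
    "Refunds are processed within five business days.",
    "Our support team is available on weekdays.",
    "Shipping takes three to seven business days.",
]
index = ToyIndex(docs)
print(index.search("How long do refunds take?", k=1))
```

A real setup replaces the word counts with dense embeddings and the linear scan with a FAISS index, but the embed, index, and search steps stay the same.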

Augmenting with LLMs

Once documents are retrieved, they are inserted into the prompt alongside the user query and passed to a language model, such as GPT. The model then generates a response based on both the query and the retrieved content.
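The augmentation step is mostly prompt assembly. The sketch below shows one possible way to do it in plain Python; `build_prompt`, `generate_answer`, and `fake_llm` are hypothetical names, the prompt wording and the `max_chars` budget are illustrative choices, and `fake_llm` is a stub that stands in for a real model call.

```python
def build_prompt(query, passages, max_chars=1000):
    """Assemble a prompt from retrieved passages, stopping before the character budget is exceeded."""
    lines = []
    used = 0
    for number, passage in enumerate(passages, start=1):
        entry = f"[{number}] {passage}"
        if used + len(entry) > max_chars:
            break  # keep the context within the chosen budget
        lines.append(entry)
        used += len(entry)
    context = "\n".join(lines)
    return (
        "Answer the question using only the sources below. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


def generate_answer(query, passages, llm):
    """Pass the augmented prompt to any callable that maps a prompt string to text."""
    return llm(build_prompt(query, passages))


def fake_llm(prompt):
    """Hypothetical stand-in for a model call, used only to make the sketch runnable."""
    return f"(stub) received a prompt with {prompt.count('[')} source(s)"


print(generate_answer(
    "How long do refunds take?",
    ["Refunds are processed within five business days."],
    fake_llm,
))
```

Numbering the sources makes it possible to ask the model to cite them, and the budget check is a simple guard against prompts that outgrow the model's context window.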

Conclusion

Building a RAG application with LangChain combines fast document retrieval with a language model, so the resulting system can generate accurate, context-aware responses grounded in retrieved documents. LangChain’s modular architecture keeps the retrieval, generation, and orchestration pieces separate, which makes it a practical choice for developers building NLP and AI-driven applications.